Here's the relevant graf:

“Hypertext” is the term invented by Ted Nelson in 1965 to describe text that, unlike traditional linear writing, spreads out in a network of nodes and links. Nelson’s idea hearkened back to Vannevar Bush’s celebrated “As We May Think,” paralleled Douglas Engelbart’s pioneering work on networked knowledge systems, and looked forward to today’s Web.

This original conception of hypertext fathered two lines of descent. One adopted hypertext as a practical tool for organizing and cross-associating information; the other embraced it as an experimental art form, which might transform the essentially linear nature of our reading into a branching game, puzzle or poem, in which the reader collaborates with the author. The pragmatists use links to try to enhance comprehension or add context, to say “here’s where I got this” or “here’s where you can learn more”; the hypertext artists deploy them as part of a larger experiment in expanding (or blowing up) the structure of traditional narrative.

These are fundamentally different endeavors. The pragmatic linkers have thrived in the Web era; the literary linkers have so far largely failed to reach anyone outside the academy. The Web has given us a hypertext world in which links providing useful pointers outnumber links with artistic intent a million to one. If we are going to study the impact of hypertext on our brains and our culture, surely we should look at the reality of the Web, not the dream of the hypertext artists and theorists.

The other big problem with Carr’s case against links lies in that ever-suspect phrase, “studies show.” Any time you hear those words your brain-alarm should sound: What studies? By whom? What do they show? What were they actually studying? How’d they design the study? Who paid for it?

To my surprise, as far as I can tell, not one of the many other writers who weighed in on delinkification earlier this year took the time to ask those questions of the studies Carr cites. I did, and here’s what I found.

You recall Carr’s statement that “people who read hypertext comprehend and learn less, studies show, than those who read the same material in printed form.” Yet the studies he cites show nothing of the sort. Carr’s critique of links employs a bait-and-switch dodge: He sets out to persuade us that Web links — practical, informational links — are brain-sucking attention scourges robbing us of the clarity of print. But he does so by citing a bunch of studies that actually examined the other kind of link, the “hypertext will change how we read” kind. Also, the studies almost completely exclude print.

If you’re still with me, come a little deeper into these linky weeds. In The Shallows, here is how Carr describes the study that is the linchpin of his argument:

In a 2001 study, two Canadian scholars asked seventy people to read “The Demon Lover,” a short story by the modernist writer Elizabeth Bowen. One group read the story in a traditional linear-text format; a second group read a version with links, as you’d find on a Web page. The hypertext readers took longer to read the story, yet in subsequent interviews they also reported more confusion and uncertainty about what they had read. Three-quarters of them said that they had difficulty following the text, while only one in ten of the linear-text readers reported such problems. One hypertext reader complained, “The story was very jumpy…”

Sounds reasonable. Then you look at the study, and realize how misleadingly Carr has summarized it — and how little it actually proves.

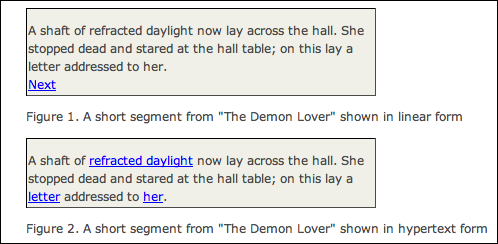

The researchers Carr cites divided a group of readers into two groups. Both were provided with the text of Bowen’s story split into paragraph-sized chunks on a computer screen. (There’s no paper, no print, anywhere.) For the first group, each chunk concluded with a single link reading “next” that took them to the next paragraph. For the other group, the researchers took each of Bowen’s paragraphs and embedded three different links in each section — which seemed to branch in some meaningful way but actually all led the reader on to the same next paragraph. (The researchers didn’t provide readers with a “back” button, so they had no opportunity to explore the hypertext space — or discover that their links all pointed to the same destination.)

Here’s an illustration from the study:

Bowen’s story was written as reasonably traditional linear fiction, so the idea of rewriting it as literary hypertext is dubious to begin with. But that’s not what the researchers did. They didn’t turn the story into a genuine literary hypertext fiction, a maze of story chunks that demands you assemble your own meaning. Nor did they transform it into something resembling a piece of contemporary Web writing, with an occasional link thrown in to provide context or offer depth.

No, what the researchers did was to muck up a perfectly good story with meaningless links. Of course the readers of this version had a rougher time than the control group, who got to read a much more sensibly organized version. All this study proved was something we already knew: that badly executed hypertext can indeed ruin the process of reading. So, of course, can badly executed narrative structure, or grammar, or punctuation.

Rosenberg also has a nice follow-up post on what it is that links actually end up doing in articles on the web.