Monday, December 27, 2010

Quote of the week

The New York Times reporting on the post-Christmas blizzard that just ravaged the North-East United States:

Everywhere, the winds whispered and moaned in their secret Ice Age language.

Monday, December 20, 2010

My contribution to the Google Ngrams show

Google released its Ngrams tool two weeks ago and everyone seems to have tried something or other with it. (Some folks also pointed out, wisely, that we need to make sure that the data these visualizations rely upon is accurate -- or at least we need to find a way to estimate its accuracy.)

But anyway, back to my point. In our Social Theory class this semester, we read David Harvey's "The Condition of Postmodernity." Harvey's thesis in this book is that what we call postmodernity is a specific cultural manifestation of the changes in the economic framework. The structure of capitalism, he thinks (like a good Marxist), changed in the 1970s and went from a regime of Fordism combined with Keynesianism to a regime of flexible accumulation (looser labor laws, easier capital flows, etc.). Postmodernism is a specific cultural offshoot -- the superstructure -- of these changes in the base.

So. I put "productivity" and "efficiency" into the Ngram viewer and this is the graph that came up: (click on the figure to see a bigger version)

Interesting, isn't it? I consider "efficiency" to be perhaps the word associated with Fordism. "Productivity," used so much in corporations today, seems to me more an instance of the new post-1970s economy of flexible accumulation (at least according to Harvey).

The number of instances of "efficiency" peaks around 1920 and then falls (although it rebounds and then holds fairly steady). The 1970s are the period when flexible accumulation starts to replace Fordism.

On the other hand, "productivity" is practically non-existent at first, starts to increase around 1960, peaks a little after 1980 and then drops again. Again, this seems to conform, vaguely, to the shift from Fordism to flexible accumulation.

I am not really going anywhere with this and I suspect I may be getting something wrong as well. Still, it's suggestive, isn't it? Worth investigating.

Anyone have any suggestions, ideas, explanations?

Thursday, December 16, 2010

Cultural relativism

May I just say that I agree with, pretty much, every word of this?

I'm very interested in the humanities, particularly archaeology, which is my profession. But I have no interest in TV game shows, even though I know that they're extremely popular. Why is that?

A cultural idealist will reply that it's because I have good taste: historical humanities are inherently and objectively more interesting and worthwhile than TV game shows. But I'm no cultural idealist. I'm a cultural and aesthetic relativist. This means that I acknowledge no objective standards for the evaluation of works of art. There are no definitive aesthetic judgements, there is only reception history. There is no objective way of deciding whether Elvis Presley is better than Swedish Elvis impersonator Eilert Pilarm. It is possible, and in fact rather common, to prefer Lady Gaga to Johann Sebastian Bach. De gustibus non est disputandum.

This means that I can't say that it would be better if everyone who likes football took up historical humanities instead. Both football and historical humanities are fun and of no practical use. Which one we choose is a matter of individual character and subcultural background.

This is important. We do a lot of what we do because of our subcultural background, which is largely composed of class background. I am a second-generation academic from the middle class. I do middle class things such as reading novels, skiing on the golf course in the winters, writing essays like this and studying historical humanities. If my parents had been workers, then I would most likely have been doing quite different things. And that would have been fine too. One thing is as good as another provided that it is fun.

[snip]

There are those who claim that the historical humanities fill an important purpose in reinforcing democracy. Sometimes their rhetoric suggests that the main task of the humanities is indeed to keep people from becoming Nazis and repeating the Holocaust. To those who claim this ability for our disciplines, I can only say "Show me the evidence". There is in fact nothing about the humanities that automatically makes its results politically palatable. The non-humanities people I know are equally good liberals as the humanities majors. Actually, the most brown-shirted individual I have ever spoken to was an archaeology post-grad for a while in the 90s.

In the invitation to the seminar, we were warned about "heritage populism without reflection or depth". But in my experience, many of the taxpayers who fund us actually really want to enjoy the cultural heritage in a populist manner without any great reflection or depth. They understand that Late Medieval murals painter Albertus Pictor and Conan the Barbarian are not the same kind of character. But they consume stories about both for the same reason: for enjoyment's sake. This means that it's our job to make humanistic knowledge available on all levels and to meet every member of the audience where they stand. Our task, unlike that of historical novelists, is to tell true stories - that also have to be exciting and fun. Because there really is no practical use to the humanities. And an activity that is neither useful nor fun has no value whatsoever.

"... culture and heritage suffer under a utilitarian economical mode of thought that focuses on which museums, heritages [this probably refers to archaeological sites], interpretations and blogs can attract the most visitors. Such a bestsellerism can give rise to trivialised and unreflected messages." (from the invitation)

"Does the heritage sector flatten perspectives by presenting the heritage in a simple, measurable and manageable package?" (from the invitation)

This suggests a kind of punk-rock attitude where a defiant humanities scholar says "I'm not gonna provide anything measurable or manageable or trivial or popular!" And sure, that is up to the individual. But if we are to expect a monthly salary from the taxpayers, then I think we will have to accept that they want to be able to measure and manage our product. How else are they supposed to know if it's worth it to continue paying our salaries? And they want us to produce stuff that, within the realm of solid real-world humanities scholarship, is at least as much fun as a TV game show or Conan the Barbarian.

That said, I also like this part of James Clifford's post on the Greater Humanities:

The Greater Humanities are 1) interpretive 2) realist 3) historical 4) ethico-political.

- Interpretive. (read textual and philological, in broad, more than just literary, senses) Interpretive, not positivist. Interested in rigorous, but always provisional and perspectival, explanations, not replicable causes.

- Realist. (not “objective”) Realism in the Greater Humanities is concerned with the narrative, figural, and empirical construction of textured, non-reductive, multi-scaled representations of social, cultural, and psychological phenomena. These are serious representations that are necessarily partial and contestable…

- Historical. (not evolutionist, at least not in a teleological sense) The knowledge is historical because it recognizes the simultaneously temporal and spatial (the chronotopic) specificity of…well… everything. It’s evolutionist perhaps in a Darwinian sense: a rigorous grappling with developing temporalities, everything constantly made and unmade in determinate, material situations, but developing without any guaranteed direction.

- Ethico-political. (never stopping with an instrumental or technical bottom line…) It’s never enough to say that something must be true because it works or because people want or need it. Where does it work? For whom? At whose expense? Contextualizing always involves constitutive “outsides” that come back to haunt us– effects of power.

Of course, there's always a tension between being interpretive (and not given to explanations with "replicable causes") and being able to get funding. But that's another topic for another day.

Saturday, December 11, 2010

The Master Switch: What will become of the internet?

David Leonhardt has a nice review of Tim Wu's new book "The Master Switch: The Rise and Fall of Information Empires", which made me want to run out and buy it. Here's the main graf:

AT&T is the star of Wu’s book, an intellectually ambitious history of modern communications. The organizing principle — only rarely overdrawn — is what Wu, a professor at Columbia Law School, calls “the cycle.” “History shows a typical progression of information technologies,” he writes, “from somebody’s hobby to somebody’s industry; from jury-rigged contraption to slick production marvel; from a freely accessible channel to one strictly controlled by a single corporation or cartel — from open to closed system.” Eventually, entrepreneurs or regulators smash apart the closed system, and the cycle begins anew.

The story covers the history of phones, radio, television, movies and, finally, the Internet. All of these businesses are susceptible to the cycle because all depend on networks, whether they’re composed of cables in the ground or movie theaters around the country. Once a company starts building such a network or gaining control over one, it begins slouching toward monopoly. If the government is not already deeply involved in the business by then (and it usually is), it soon will be.

What, then, of the internet? Well, the answer is that it's up to us, as consumers and interested parties, to make sure that the features of the internet that we love (its openness, its ease of access and its culture of linking and transparency) survive the changes in its structure that are sure to come.

Wu recently got into a tiff with other theorists over the meaning of the word "monopoly." To know more, click here, here and here (and there are plenty of other links on the pages themselves).

Thursday, December 9, 2010

Tax cuts for the rich

I mostly don't write about politics (for a lot of reasons, but mostly because most people have already said it better!) but this paragraph in David Leonhardt's "Economic Scene" column struck me as interesting:

Mr. Obama effectively traded tax cuts for the affluent, which Republicans were demanding, for a second stimulus bill that seemed improbable a few weeks ago. Mr. Obama yielded to Republicans on extending the high-end Bush tax cuts and on cutting the estate tax below its scheduled level. In exchange, Republicans agreed to extend unemployment benefits, cut payroll taxes and business taxes, and extend a grab bag of tax credits for college tuition and other items.

Now consider this: Leonhardt is a reporter for the Times. As such, when he writes an op-ed type piece, it is mostly in the restrained way that the "Analysis" sections for the paper are written (see here, for example). He doesn't -- and I would think, can't -- write in the way the Times op-ed writers write. (For instance, see Paul Krugman's latest, which has a decidedly -- and probably justifiably -- apocalyptic tone.)

And yet, just read that opening paragraph I quoted above. Even a simple, diplomatic, faux-objective rendering of the recent tax-cuts deal makes it very clear: what the Republicans wanted was tax cuts for richer people (or, to be more technical, for those making more than $250,000 a year; and the estate tax cut will only benefit multi-millionaires). How on earth will the Republicans spin this? One would think that the Democrats could make hay with this through some good old-fashioned, rabble-rousing, against-the-fat-cats type of populism. But no. Listen to this missive from Kevin Drum, about how the deal is spun in Virginia:

I hate to say this but I do have my ear to the ground with a lot of "regular" folks and the way this impending "deal" is being described by most of them is that Republicans are pushing to keep the tax cuts which create jobs and the Democrats are pushing to extend unemployment benefits so lazy people can sit on their asses a while longer and live off those of us who work hard.

In other words, it is not Republicans who will end up being defensive about the deal but Democrats! Even when the concessions that the Democrats fought for will benefit more people -- and more importantly, people in need -- as well as give the economy a much-needed stimulus. Here is a graphic that shows the number of people who will benefit because of the concessions the Democrats fought for (link):

I have no stake in this battle, really. But it strikes me that something interesting is going on here. Republican voters in the US routinely list the deficit as their main concern. Making the Bush tax cuts permanent, the Republicans' main priority, will increase the deficit anyway. (And to be fair, Democrats are fine with making the tax cuts that apply to family incomes of less than 250k permanent as well.) So how does the perception that the tax cuts are good, even if they increase the much-cared-about deficit, come about?

One theory could be the Marxist notion of "false consciousness," that voters are in the grip of an ideology (in the Marxist sense). I find these explanations unsatisfying.

My guess is that the deficit -- a technocratic concept if ever there was one -- has fused into the notions of responsibility that constitute these voters' identities. So these voters tolerate higher deficits if they are incurred for "responsible" reasons but not so much if the deficit expands in response to an extension of unemployment benefits (even if they see unemployment all around them). I think this is an issue where some qualitative research -- in the form of extensive, unstructured interviews with Republican voters -- might help. How has the deficit seeped into their very selves? How do they understand themselves in terms of this deficit? Anyone know of any research on this?

-------------------------

Postscript: I began to think that George Lakoff may be on to something after all -- even if Lakoff's theory is too much like false consciousness in the guise of cognitive science. Lakoff says voters typically think of the state in terms of a family metaphor: the nation-as-family. A state with a deficit therefore corresponds to a family living beyond its means, a classic sign of irresponsibility. That explains the concern about the deficit, but what about support for the tax cuts? Anyone know of any other possible explanations? Any theories of political identity-formation that can be applied here?

Tuesday, December 7, 2010

Libraries, Google and the Internet

Robert Darnton has an excellent piece in the New York Review of Books on the future of academic presses and libraries and whether the democratic accessibility promised by the internet will ever come to pass:

Google represents the ultimate in business plans. By controlling access to information, it has made billions, which it is now investing in the control of the information itself. What began as Google Book Search is therefore becoming the largest library and book business in the world. Like all commercial enterprises, Google’s primary responsibility is to make money for its shareholders. Libraries exist to get books to readers—books and other forms of knowledge and entertainment, provided for free. The fundamental incompatibility of purpose between libraries and Google Book Search might be mitigated if Google could offer libraries access to its digitized database of books on reasonable terms. But the terms are embodied in a 368-page document known as the “settlement,” which is meant to resolve another conflict: the suit brought against Google by authors and publishers for alleged infringement of their copyrights.

Despite its enormous complexity, the settlement comes down to an agreement about how to divide a pie—the profits to be produced by Google Book Search: 37 percent will go to Google, 63 percent to the authors and publishers. And the libraries? They are not partners to the agreement, but many of them have provided, free of charge, the books that Google has digitized. They are being asked to buy back access to those books along with those of their sister libraries, in digitized form, for an “institutional subscription” price, which could escalate as disastrously as the price of journals. The subscription price will be set by a Book Rights Registry, which will represent the authors and publishers who have an interest in price increases. Libraries therefore fear what they call “cocaine pricing”—a strategy of beginning at a low rate and then, when customers are hooked, ratcheting up the price as high as it will go.

To become effective, the settlement must be approved by the district court in the Southern Federal District of New York. The Department of Justice has filed two memoranda with the court that raise the possibility, indeed the likelihood, that the settlement could give Google such an advantage over potential competitors as to violate antitrust laws. But the most important issue looming over the legal debate is one of public policy. Do we want to settle copyright questions by private litigation? And do we want to commercialize access to knowledge?

I hope that the answer to those questions will lead to my happy ending: a National Digital Library—or a Digital Public Library of America (DPLA), as some prefer to call it. Google demonstrated the possibility of transforming the intellectual riches of our libraries, books lying inert and underused on shelves, into an electronic database that could be tapped by anyone anywhere at any time. Why not adapt its formula for success to the public good—a digital library composed of virtually all the books in our greatest research libraries available free of charge to the entire citizenry, in fact, to everyone in the world?

Monday, November 22, 2010

Yes, yes, the internet is a big bad thing, now can we move on?

Another day, another NYT piece, on growing up digital. (For the rest, see here.)

It's always the same old stuff: our brains are changing, the number of distractions has exploded with the internet! Blah blah.

But has it? It doesn't seem to me, at least from the piece, that it was all that easy when I was growing up either. There were always TV shows, there were always friends, there were always story-books. Studying is always hard when you're a kid; that's what parents are for: to make kids study!

My point is: kids always have difficulties studying. The Internet has not exacerbated anything. If it wasn't the internet, it would be video games. Or television. There's always something. Let's not get carried away.

Friday, November 19, 2010

Journalists and the web

One of my pet peeves with newspapers today is that even with all the ink (and tears!) that get shed about how the web has changed the dynamics of the newspaper industry -- and may end up transforming the publishing culture completely -- newspapers still don't use the web as it should be used. The most important affordance of the web is linking -- and newspapers still don't link in their online articles.

So, I am always happy when the odd journalist does do that. Here is A. O. Scott in his review of the Deathly Hallows (to be fair, this is not the first time Scott has linked):

The movie, in other words, belongs solidly to Mr. Radcliffe, Mr. Grint and Ms. Watson, who have grown into nimble actors, capable of nuances of feeling that would do their elders proud. One of the great pleasures of this penultimate “Potter” movie is the anticipation of stellar post-“Potter” careers for all three of them.

Click on the link to see where it leads! Heh.

The always-solid David Leonhardt links too.

Wednesday, November 17, 2010

On Government 2.0

Tom Slee's twin posts on Government 2.0 (here and here) are definitely worth your time.

Tom is a little more pessimistic than I would be (although his pessimism is the principled, rigorous type, which is always welcome).

Quickly, these are his main objections:

- The rhetoric of citizen engagement too often masks a reality of commercialization (last time)

- Information is not always democratizing.

- Information is not always the problem.

- Transparency is an arms race.

- Privacy is the other side of the coin.

- Money flows to Silicon Valley.

Point 2, that information is not always democratizing, is well-taken. Slee points out that the people who take most advantage of information that is made freely available are those who are already privileged and possess a certain amount of capital (both economic and cultural). He links to this article, which in turn links to this very interesting paper, on how the digitization of Bangalore land records has turned out. Both are well worth taking a look at (and I should mention that I only skimmed the paper).

But point 2 also strikes me as too broad. The same thing can be said of the deployment of any technological framework: it can only be used by those who have the skills and the cultural capital to access it. One could argue that Government 2.0 will also make it easier for NGOs, watchdog groups, and so on, to do their job, which in turn may end up helping the underprivileged. As always, everything depends on context.

Tuesday, August 31, 2010

Scott Rosenberg vs. Nicholas Carr

Scott Rosenberg has a nice post up on his blog explaining why the studies that Nicholas Carr cites to show that web links impede understanding do nothing of the sort. This doesn't completely rebut what Carr is saying, of course, but it does tell us that it's really too soon to know whether links impede or enhance our understanding.

Here's the relevant graf:

“Hypertext” is the term invented by Ted Nelson in 1965 to describe text that, unlike traditional linear writing, spreads out in a network of nodes and links. Nelson’s idea hearkened back to Vannevar Bush’s celebrated “As We May Think,” paralleled Douglas Engelbart’s pioneering work on networked knowledge systems, and looked forward to today’s Web.

This original conception of hypertext fathered two lines of descent. One adopted hypertext as a practical tool for organizing and cross-associating information; the other embraced it as an experimental art form, which might transform the essentially linear nature of our reading into a branching game, puzzle or poem, in which the reader collaborates with the author. The pragmatists use links to try to enhance comprehension or add context, to say “here’s where I got this” or “here’s where you can learn more”; the hypertext artists deploy them as part of a larger experiment in expanding (or blowing up) the structure of traditional narrative.

These are fundamentally different endeavors. The pragmatic linkers have thrived in the Web era; the literary linkers have so far largely failed to reach anyone outside the academy. The Web has given us a hypertext world in which links providing useful pointers outnumber links with artistic intent a million to one. If we are going to study the impact of hypertext on our brains and our culture, surely we should look at the reality of the Web, not the dream of the hypertext artists and theorists.

The other big problem with Carr’s case against links lies in that ever-suspect phrase, “studies show.” Any time you hear those words your brain-alarm should sound: What studies? By whom? What do they show? What were they actually studying? How’d they design the study? Who paid for it?

To my surprise, as far as I can tell, not one of the many other writers who weighed in on delinkification earlier this year took the time to do so. I did, and here’s what I found.

You recall Carr’s statement that “people who read hypertext comprehend and learn less, studies show, than those who read the same material in printed form.” Yet the studies he cites show nothing of the sort. Carr’s critique of links employs a bait-and-switch dodge: He sets out to persuade us that Web links — practical, informational links — are brain-sucking attention scourges robbing us of the clarity of print. But he does so by citing a bunch of studies that actually examined the other kind of link, the “hypertext will change how we read” kind. Also, the studies almost completely exclude print.

If you’re still with me, come a little deeper into these linky weeds. In The Shallows, here is how Carr describes the study that is the linchpin of his argument:

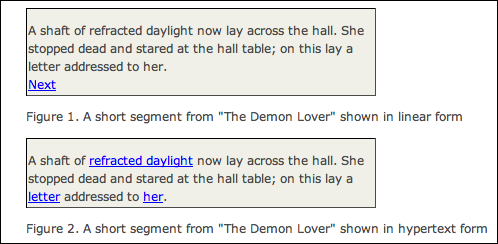

In a 2001 study, two Canadian scholars asked seventy people to read “The Demon Lover,” a short story by the modernist writer Elizabeth Bowen. One group read the story in a traditional linear-text format; a second group read a version with links, as you’d find on a Web page. The hypertext readers took longer to read the story ,yet in subsequent interviews they also reported more confusion and uncertainty about what they had read. Three-quarters of them said that they had difficulty following the text, while only one in ten of the linear-text readers reported such problems. One hypertext reader complained, “The story was very jumpy…”Sounds reasonable. Then you look at the study, and realize how misleadingly Carr has summarized it — and how little it actually proves.

The researchers Carr cites divided a group of readers into two groups. Both were provided with the text of Bowen’s story split into paragraph-sized chunks on a computer screen. (There’s no paper, no print, anywhere.) For the first group, each chunk concluded with a single link reading “next” that took them to the next paragraph. For the other group, the researchers took each of Bowen’s paragraphs and embedded three different links in each section — which seemed to branch in some meaningful way but actually all led the reader on to the same next paragraph. (The researchers didn’t provide readers with a “back” button, so they had no opportunity to explore the hypertext space — or discover that their links all pointed to the same destination.)

Here’s an illustration from the study:

Bowen’s story was written as reasonably traditional linear fiction, so the idea of rewriting it as literary hypertext is dubious to begin with. But that’s not what the researchers did. They didn’t turn the story into a genuine literary hypertext fiction, a maze of story chunks that demands you assemble your own meaning. Nor did they transform it into something resembling a piece of contemporary Web writing, with an occasional link thrown in to provide context or offer depth.

No, what the researchers did was to muck up a perfectly good story with meaningless links. Of course the readers of this version had a rougher time than the control group, who got to read a much more sensibly organized version. All this study proved was something we already knew: that badly executed hypertext can indeed ruin the process of reading. So, of course, can badly executed narrative structure, or grammar, or punctuation.

Rosenberg also has a nice follow-up post on what it is that links actually end up doing in articles on the web.

Friday, August 6, 2010

A brief comment about Inception (*no spoilers*)

Just saw Inception today. I liked it a lot but it's an almost textbook illustration of Christopher Nolan's strengths and weaknesses as a director. As is my nature, I will dwell more on the weaknesses, but then I think everyone appreciates the strengths already (the movie's success testifies to that!). So, without any further ado:

Nolan is -- to use the film's own language -- an architect, but not an artist. He's a master at creating concepts, fleshing them out. His films resemble jigsaw puzzles more than anything else, an intricate array of pieces that come together in the end, where the glue that holds the audience is the connection between the pieces even if the pieces themselves are pretty uninteresting.

Nolan is TERRIBLE at action sequences. TERRIBLE. How-do-the-studio-bosses-let-him-get-away-with-it-terrible. That's the biggest problem in Inception: none of the big fight sequences makes any sense whatsoever. By that I mean, there is no sense of time and space, just random cutting between people shooting at each other. Terrible. However, Inception has a far more intricate structure than The Dark Knight, so audiences don't really notice. Still, its incoherent action sequences (and the fact that there are so many of them!) make Inception just about a so-so movie (as opposed to a really good one).

Nolan's expository sequences are clunky but his big emotional scenes just don't work. The man clearly needs a good script-writer to make his concepts work.

More points about the movie in general:

Hans Zimmer's score is way over-the-top, distracting and unnecessary. Sometimes I could barely hear what the actors were saying.

The performances are smart but Marion Cotillard's goes into pure awesomeness territory! I have stopped being surprised at how good Joseph Gordon-Levitt is in any role. Oh, and someone needs to give Ken Watanabe a lesson in diction.

Wednesday, July 28, 2010

What's good for me is good for you

Yes, what's good for the publishing industry is also good for us. How could we be so stupid and not know this?

Many would argue that the efflorescence of new publishing that Amazon has encouraged can only be a good thing, that it enriches cultural diversity and expands choice. But that picture is not so clear: a number of studies have shown that when people are offered a narrower range of options, their selections are likely to be more diverse than if they are presented with a number of choices so vast as to be overwhelming. In this situation people often respond by retreating into the security of what they already know.

[...]

At the Book Expo in New York City, Jonathan Galassi, head of Farrar, Straus and Giroux, spoke for many in the business when he said there is something "radically wrong" with the way market determinations have caused the value of books to plummet. He's right: a healthy publishing industry would ensure that skilled authors are recompensed fairly for their work, that selection by trusted and well-resourced editors reduces endless variety to meaningful choice and that ideas and artistry are as important as algorithms and price points in deciding what is sold.

I always love how a "we are under attack and need to survive" argument is never made; instead, the argument becomes -- without any self-consciousness whatsoever -- "we are under attack and our demise will result in the decline of civilization and is therefore bad for EVERYONE."

Friday, July 23, 2010

Algorithmic culture and the bias of algorithms

Via Alan Jacobs, I came across a thought-provoking blog-post by Ted Striphas on "algorithmic culture." The issue is the algorithm behind Amazon's "Popular Highlights" feature. (In short, Amazon collects all the passages in its Kindle books that have been marked, collates this information and displays it on its website and/or on the Kindle. So you can now see what other people have found interesting in a book and compare it with what you found interesting.)

Striphas brings up two problems, one minor, one major. The minor one:

When Amazon uploads your passages and begins aggregating them with those of other readers, this sense of context is lost. What this means is that algorithmic culture, in its obsession with metrics and quantification, exists at least one level of abstraction beyond the acts of reading that first produced the data.

This is true but it could easily be remedied. Kindle readers can also annotate passages in the text and if they feel like it, they could upload their annotations along with the passages they have marked. That should supply the context of why the passages were highlighted. (Of course, this would bring up another thorny question: what algorithm to use to aggregate these annotations.)

But he brings up another far more important point:

What I do fear, though, is the black box of algorithmic culture. We have virtually no idea of how Amazon’s Popular Highlights algorithm works, let alone who made it. All that information is proprietary, and given Amazon’s penchant for secrecy, the company is unlikely to open up about it anytime soon.

This is a very good point and it brings up what I often call the "bias" of algorithms. Algorithms, after all, are made by people and they show all the biases that their designers put into them. In fact, it's wrong to call them "biases" since these actually make the algorithm work! Consider Google's search engine. You type in a query and Google claims to return the links that you will find most "relevant." But "relevant" here means something different from the way you use it in your day-to-day life. "Relevant" here means "relevant in the context of Google's algorithm" (a.k.a. PageRank).

The problem is that this distinction is lost on people who just don't use Google all that much. I spend a lot of time programming and Google is indispensable to me when I run into bugs. So it is fair to say that I am something of an "expert" when it comes to using Google. I understand that to use Google optimally, I need to use the right keywords, often the right combination of keywords along with the various operators that Google provides. I am able to do this because:

1. I am in the computer science business, and I have some idea of how the PageRank algorithm works (although I suspect not all that much), and

2. I use Google a lot in my day-to-day life.

I suspect that (1) isn't at all important but (2) is.

But (2) also has a silver lining. In his post, Striphas comments:

In the old paradigm of culture — you might call it “elite culture,” although I find the term “elite” to be so overused these days as to be almost meaningless — a small group of well-trained, trusted authorities determined not only what was worth reading, but also what within a given reading selection were the most important aspects to focus on. The basic principle is similar with algorithmic culture, which is also concerned with sorting, classifying, and hierarchizing cultural artifacts. [...]

In the old cultural paradigm, you could question authorities about their reasons for selecting particular cultural artifacts as worthy, while dismissing or neglecting others. Not so with algorithmic culture, which wraps abstraction inside of secrecy and sells it back to you as, “the people have spoken.”

Well, yes and no. There's a big difference between the black box of algorithms and the black box of elite preferences. Algorithms may be opaque but they are still rule-based. You can still figure out how to use Google to your own advantage by playing with it. For any query you give to it, Google will give the exact same response (well, for a certain period of time at least). So you can play with it and find out what works for you and what doesn't. The longer you play with it, the longer you use it, the more you become familiar with its features, the less opaque it seems.

Not so with what Striphas calls "elite culture," which, if anything, is far more opaque and far less amenable to this kind of trial-and-error practice. (That's because the actions of experts aren't really rule-based.)

I am not sure where I am going with this and I am certainly not sure whether Amazon's Kindle aggregation mechanism will become as transparent as Google's search algorithm by trial-and-error but my point is that it's too soon to give up on algorithmic culture.

Postscript: My deeper worry is that when we actually reach the point when algorithms are used far more than they are now, the world will be divided into two types of people. Those who can exploit the biases of the algorithm to make it work well for them (like I do with PageRank). And those who can't. It's a scary thought, although since I have no clue about what such a world will look like, this is still an empty worry.

Monday, July 19, 2010

The problem with the problem with behavioral economics

There's a lot to like in George Loewenstein and Peter Ubel's op-ed in the New York Times on the limits of behavioral economics and it's possible to draw various conclusions from it. But the piece is at heart just a good old-fashioned moral criticism of government (and the Democratic Party) for not doing the "right" thing and indirectly, also of democratic politics in general.

Erik Voeten interprets the authors' argument as "quite damning for the behavioral economics revolution."

Loewenstein and Ubel’s op-ed is mostly aimed at warning people that behavioral solutions are no panacea. I am only a modest consumer of this research so I cannot evaluate all their claims. Yet, it strikes me that if they are right, their argument is really quite damning for the behavioral economics revolution. Essentially, they assert that traditional economic analysis has ultimately much more relevance for the analysis of major social problems and for finding solutions to them. Behavioral economics can complement this but cannot be a viable alternative. Within political science and other social sciences the insights of behavioral economics are sometimes interpreted as undermining the very foundations of classical economic analysis and warranting an entirely different approach to social problems. At the very least, the op-ed is a useful reminder that careful scrutiny of effect sizes matters greatly.

I suspect this is right. But this has far more to do with the disciplinary matrix of economics than it has to do with the insights of the behavioral revolution. I am no economist but as I understand it, the idea that human beings use a certain form of cost-benefit analysis to make economic decisions (homo economicus) forms the cornerstone of traditional economics. According to this theory, people make decisions that maximize benefits and minimize costs. But this, of course, all depends on what counts as a cost and what counts as a benefit, about which the homo economicus model says nothing.

Initially at least, costs and benefits were thought to be monetary. Increasingly, we are beginning to realize that we need to factor culture into these costs and benefits. And while behavioral economics has helped us understand that there are costs and benefits that are not monetary in nature, it has not changed the cost-benefit model itself. In that respect, it has in no way undermined the foundations of economic theory.

As an amazon.com reviewer puts it (scroll down to read):

At root, the rational actor model says "people act to maximize utility." Utility is left more or less undefined.

Thus, the model really says "people act to maximize something." Or put differently, "There exists a way to interpret human behavior as a maximization of something." Although it does not look like it, this is a statement about our ability to simulate a human using an algorithm. It is on par with the statement that humans are Turing machines.

This model will never lose, because it is very flexible. A challenge to the model will always take the form of a systematic or nonsystematic deviation between some specific "rational actor" model and the true actions of a human. But the challenge will always fail:

- A systematic deviation will by definition always be combatted by enriching the rational actor model to eliminate the deviation.

- A nonsystematic deviation is explainable as "noise" or "something we don't understand yet."

[...] There is really no way to beat the rational actor model, because it is really the outline of a research program, it is not a fleshed-out model. And the research program is the correct one, by the way.

Being a Kuhnian, I wouldn't say that this research program is the "correct" one. But it's an extraordinarily productive one, that gives its practitioners the ability to construct a variety of problems and solve them. In that respect behavioral economics cannot be -- and never was -- an alternative to it.

Wednesday, June 30, 2010

Do we save versions on Word?

Ruth Franklin, in a recent short essay in The New Republic -- in turn a reaction to Sam Tanenhaus' essay on John Updike's archives -- makes this strange remark:

But the computer discourages the keeping of archives, at least in their traditional form. If Updike had been working in Word, he might have left no trace of the numerous emendations to the opening airport scene of Rabbit at Rest, which Tanenhaus carefully chronicles.

What on earth is she talking about? Is this how essayists write in Word? With one single file that is endlessly written in again and again until the final draft is ready? Because that's not how my papers get written, at any rate. Each paper that I have submitted to any conference is composed in a series of drafts -- sometimes up to 25 drafts -- all of which are numbered. And I don't think I am alone in this.

My guess is that writing stories on the computer will end up giving us far more drafts than writing on paper ever could.

So -- again -- what on earth is she talking about?

Wednesday, June 9, 2010

Richard Rorty and the idea of research

[This post is a little different from others; it summarizes my engagement with the works of the philosopher Richard Rorty. I like Rorty's work a lot but interestingly enough, I find that he helps me less as "research" and more as a guide to understand how to live a good life (on which, more to come some other time). This post argues that Rorty was subtly dismissive of research itself (and his reasons for doing so!) and this, in turn, is why systematic thinkers don't care for him all that much. Why Simon Blackburn, for instance, calls him the "professor of complacency" or why Thomas Nagel doesn't even deign to mention him in The View from Nowhere.]

When I started reading Richard Rorty's magnum opus "Philosophy and the Mirror of Nature," the first thing that surprised me was that Rorty's heroes -- according to him, the three greatest philosophers of the twentieth century: Heidegger, Wittgenstein and Dewey -- were hardly to be found in the book. The book was instead focused almost completely on analytic philosophers: Quine, Sellars, Davidson and Kuhn, among others. Most of Mirror of Nature is occupied with understanding how the notion of the mind (and knowledge) as the "mirror of nature" came to be so widely established, as well as how recent developments have shown that this notion is mistaken. Instead, Rorty argues:

[...] "objective truth" is no more and no less than the best idea we currently have about how to explain what is going on. [...] inquiry is made possible by the adoption of practices of justification and [...] such practices have possible alternatives. They [i.e. these practices] are just the facts about what a given society, or profession, or other group, takes to be good ground for assertions of a certain sort. Such disciplinary matrices are studied by the usual empirical-cum-hermeneutic methods of "cultural anthropology." [Page 385]

Consider what Rorty is saying here. The discipline of philosophy has long conceived itself to be an arbiter of knowledge claims. Now typically, all disciplines (psychology, history, geology, physics etc.) make knowledge claims so the job of philosophy (once the physical world had been ceded for explanation to the natural sciences) was to adjudicate between these claims, to put upstart disciplines (say, like sociology or cultural anthropology) in their places and to proclaim the superiority of, say, physics as an ideal for all other disciplines to look up to. But behind this, says Rorty, is the presumption that the mind is a mirror of nature and truth means a correspondence between words and things. Recent developments in analytic philosophy itself -- the work of Quine, Sellars, Davidson, Kuhn -- have led to a breakdown in this idea. Truth is far better described in terms of the practices of justification used by communities of practitioners to "prove" something is true rather than in terms of correspondence, the key question being how communities of human beings (quantum physicists, mathematicians, etc.) with their own methods and cultures decide to agree upon certain things (what counts as evidence, what counts as good evidence and so on).

Now these are just the sorts of things that are studied by the sciences of society: sociology and cultural anthropology* (and to a much smaller extent, psychology). This would make cultural anthropology the arbiter of knowledge claims, no? Taking over the status of philosophy and all that?

Well, no, not really. The most irritating thing in Mirror of Nature is the cavalier way Rorty treats his beloved "practices of justification" -- the same ones that he thinks are the key to understanding knowledge and epistemology. Not as a topic of research, not as an area where we are far from having the last word, but as a solved problem, something to be dismissed as just cultural anthropology. Whereas it's far from clear that we have actually figured out just how those practices of justification work.

However, as I read more, I realized what Rorty was trying to do. To remove the pretensions of philosophers (and philosophy) that they stand at the top of the great-chain-of-disciplines is one thing. But it would be counter-productive to do this and then simply replace philosophy with another discipline as THE discipline that adjudicates knowledge claims. So Rorty, preferring to remain true to his non-hierarchical conception of the disciplines, studiously plays down cultural anthropology. Fair enough.

But sometimes, he seems to go further than that, actually disparaging the very idea of research. As an example, consider this sentence:

To say that we have changed ourselves by internalizing a new self-description [...] is true enough. But this is no more startling than the fact that men changed the data of botany by hybridization, which was in turn made possible by botanical theory, or that they changed their own lives by inventing bombs and vaccines. [Page 386]

The passage seems to be doing two things at once. First, it makes a cognitive claim: that, as a topic of investigation, changing one's life by internalizing a self-description should be given no more importance than, say, the change in human life caused by the invention of vaccines. Fair enough. But it also seems to imply that the problem of how human beings internalize a particular self-description is already solved. But is it really? No! So why would Rorty try and hint something like that? [In my copy of Mirror, I have scrawled "But how???" next to this paragraph.]

The key to what Rorty is trying to do lies in a section called "Systematic Philosophy and Edifying Philosophy" (page 365). Here, he points out that there are two types of philosophers. There are systematic philosophers whose aim is to do research; meaning that they are interested in systematically investigating certain issues (say epistemology). Systematic philosophers can be revolutionary, meaning that they can change completely the vocabulary of the field itself. But even these philosophers are committed to a vision of philosophy as research and would presumably like their own vocabularies to be institutionalized. In contrast to these, there are edifying philosophers (or thinkers) who do not attempt to be systematic, are not interested in research per se. Even if they offer some revolutionary thoughts (like, say, the later Wittgenstein), they dread having their own vocabularies institutionalized; they most emphatically do not want to initiate a new research tradition.

(Even I -- as someone generally sympathetic to Rorty's cause -- was riled when I read the line above about conversation degenerating into inquiry.)

You can see this distinction being extended to all types of thinkers in general, not just philosophers. By thinkers I mean physicists, biologists, cultural anthropologists, historians, novelists, poets, actors, directors; anyone who expresses herself in a certain way through a certain medium.

The distinction between systematic and edifying thinkers then turns out to be this: systematic thinkers like to understand the world but they aren't very interested in changing it. Or rather, while they may be interested in changing it, they are far more interested in the abstractions that come out of their research. Which is not to say that their research cannot have uses -- nor that it can't change the world -- but just that they aren't interested in it for that reason. Also they are read by only a limited set of people i.e. other specialists. Physicists, chemists, molecular biologists, philosophers, psychologists, sociologists, cultural anthropologists, mathematicians -- every one of us who likes systematic research -- all fall into this category.

As opposed to this, there are edifying thinkers. They too have abstract interests but their work has the capacity to change the world simply because their work is accessible to more people. Novelists, poets, ethnographers, journalists, social workers and above all, politicians, fall into this category. At their best, they broaden the horizons of the human experience, they make us understand and empathize with others, those with whom we couldn't possibly have had any contact otherwise. Edifying thinkers -- along with edifying technologies: the novel, the television program, the play -- are responsible for improving human solidarity and making today's liberal democratic societies possible.

Richard Rorty wants you to be an edifying thinker, not a systematic one. Philosophy and the Mirror of Nature can be looked at as an exhortation to pursue a career where there is more of a possibility to change the world -- if not in an earth-shaking way, then, at least, in a much smaller way. The most astounding thing is that, despite being a book about epistemology, it succeeds. E.g. Matthew Yglesias admits that Mirror "convinced me that fascinating as I find the problems of philosophy to be, it made more sense to spend my time trying to apply the skills I learned thinking about them to the problems of the world than to the problems themselves." I would argue that this is exactly the kind of response that Rorty would have wished for. Certainly Yglesias influences far more people now, writing about politics and public policy as a blogger, than he would have otherwise, even if he had tried to become a Rortyan "edifying philosopher."

In his review of Mirror, Ian Hacking argues that Rorty's arguments from Quine, Sellars and Davidson can be taken as arguments against a certain kind of epistemology, one that models knowledge as static, unchanging representations of things that are "out there." But another kind of epistemology is certainly possible, one that is more holistic and looks at knowledge as a historical phenomenon that is made possible (or "constructed") by certain changes in the constitution of societies. Indeed, Hacking and Foucault can be considered to be practitioners of this new epistemology.

I don't think Rorty would disagree with that. But he doesn't want us to be doing epistemology, period -- even if it is historical ontology. He wants us to do things that change the world tangibly, and becoming practitioners of the new epistemology would do nothing to change the world, as it is a science that is more interested in looking back and then even further back. Hacking's "history of the present," Rorty would argue, doesn't really change the present, except in that it initiates new research programs, all of which, in turn, keep looking backwards!

It is sort of this subtle hostility (but no outright antagonism) to research that Rorty exhibits -- and its all-too-apparent success in weaning away promising researchers, e.g. Yglesias -- that I think is the reason why researchy philosophers (Nagel, Searle, et al) are in turn so hostile to Rorty. It's also the main reason that as an epistemologist, I find Rorty to be not-so-useful. Because Rorty doesn't want to promote any research agenda and has a (deliberate) tendency to treat interesting problems (of practices of justification, inter-subjectivity, etc.) as solved or not-so-interesting, his synthesis of Sellars, Quine, Davidson et al is only useful to a point. Once you read him, you can go to all the sources that he points to -- Hacking, Davidson, Sellars, Kuhn, Foucault, Heidegger, Dewey -- who agree with Rorty about the Mirror of Nature but unlike him, are also committed to understanding knowledge.

[Rorty, however, is a wonderful philosopher of life, a point that I may address sometime in another post.]

---------------------------------------------------------------------

Endnotes:

* E.g. on page 381 Rorty says:

One way to see edifying philosophy as the love of wisdom is to see it as the attempt to prevent conversation from degenerating into inquiry, into a research program. Edifying philosophers can never end philosophy, but they can help prevent it from attaining the secure path of a science.

(Even I -- as someone generally sympathetic to Rorty's cause -- was riled when I read the line above about conversation degenerating into inquiry.)

You can see this distinction being extended to all types of thinkers in general, not just philosophers. By thinkers I mean physicists, biologists, cultural anthropologists, historians, novelists, poets, actors, directors; anyone who expresses herself in a certain way through a certain medium.

The distinction between systematic and edifying thinkers then turns out to be this: systematic thinkers like to understand the world but they aren't very interested in changing it. Or rather, while they may be interested in changing it, they are far more interested in the abstractions that come out of their research. Which is not to say that their research cannot have uses -- nor that it can't change the world -- but just that they aren't interested in it for that reason. Also they are read by only a limited set of people i.e. other specialists. Physicists, chemists, molecular biologists, philosophers, psychologists, sociologists, cultural anthropologists, mathematicians -- every one of us who likes systematic research -- all fall into this category.

As opposed to this, there are edifying thinkers. They too have abstract interests but their work has the capacity to change the world simply because their work is accessible to more people. Novelists, poets, ethnographers, journalists, social workers and above all, politicians, fall into this category. At their best, they broaden the horizons of the human experience, they make us understand and empathize with others, those with whom we couldn't possibly have had any contact otherwise. Edifying thinkers -- along with edifying technologies: the novel, the television program, the play -- are responsible for improving human solidarity and making today's liberal democratic societies possible.

Richard Rorty wants you to be an edifying thinker, not a systematic one. Philosophy and the Mirror of Nature can be looked at as an exhortation to pursue a career where there is more of a possibility to change the world -- if not in an earth-shaking way, then, at least, in a much smaller way. The most astounding thing is that, despite being a book about epistemology, it succeeds. E.g. Matthew Yglesias admits that Mirror "convinced me that fascinating as I find the problems of philosophy to be, it made more sense to spend my time trying to apply the skills I learned thinking about them to the problems of the world than to the problems themselves." I would argue that this is exactly the kind of response that Rorty would have wished for. Certainly Yglesias influences far more people now, writing about politics and public policy as a blogger, than he would have otherwise, even if he had tried to become a Rortyan "edifying philosopher."

In his review of Mirror, Ian Hacking argues that Rorty's arguments from Quine, Sellars and Davidson can be taken as arguments against a certain kind of epistemology, one that models knowledge as static, unchanging representations of things that are "out there." But another kind of epistemology is certainly possible, one that is more holistic and looks at knowledge as a historical phenomenon that is made possible (or "constructed") by certain changes in the constitution of societies. Indeed, Hacking and Foucault can be considered to be practitioners of this new epistemology.

I don't think Rorty would disagree with that. But he doesn't want us to be doing epistemology, period -- even if it is historical ontology. He wants us to do things that change the world tangibly, and becoming practitioners of the new epistemology would do nothing to change the world, since it is a science that is more interested in looking back and then even further back. Hacking's "history of the present," Rorty would argue, doesn't really change the present, except in that it initiates new research programs, all of which, in turn, keep looking backwards!

It is this subtle hostility (but not outright antagonism) to research that Rorty exhibits -- and its all-too-apparent success in weaning promising researchers, e.g. Yglesias, away from research -- that I think is the reason why researchy philosophers (Nagel, Searle, et al) are in turn so hostile to Rorty. It's also the main reason that, as an epistemologist, I find Rorty to be not-so-useful. Because Rorty doesn't want to promote any research agenda and has a (deliberate) tendency to treat interesting problems (of practices of justification, inter-subjectivity, etc.) as solved or not-so-interesting, his synthesis of Sellars, Quine, Davidson et al is only useful to a point. Once you read him, you can go to all the sources that he points to -- Hacking, Davidson, Sellars, Kuhn, Foucault, Heidegger, Dewey -- who agree with Rorty about the Mirror of Nature but, unlike him, are also committed to understanding knowledge.

[Rorty, however, is a wonderful philosopher of life, a point that I may address sometime in another post.]

---------------------------------------------------------------------

Endnotes:

* E.g. on page 381 Rorty says:

I want to claim, on the contrary, that there is no point in trying to find a general synoptic way of "analyzing" the "functions knowledge has in universal contexts of practical life," and cultural anthropology (in a large sense which includes intellectual history) is all we need.

Friday, May 28, 2010

How we read (articles and magazines) now

In this post, I want to compare two modes of reading: how we read before the internet and how we read now. I will be limiting the analysis to news articles and magazines since I believe, pace Nicholas Carr, that it still isn't clear how the internet has changed book-reading habits. But today, the internet IS the platform for delivering news and I believe it has changed our reading habits substantially. (It's also caused a big disruption in the publishing industry.)

Just to be clear, the analysis in this post is based on a sample size of 1: me! But I believe the salient points will hold true for many others.

How we read Pre-internet

Back in the days when there was no internet, we had two newspapers delivered to our home in the morning: a newspaper in English (we alternated between The Times of India and the Indian Express) and one in Marathi (Maharashtra Times or Loksatta). On Mondays we also took in the Economic Times and on weekends, my father would usually buy some more from the news-stand (The Sunday Observer, The Asian Age).

My father would usually read every newspaper from the first page to the last. My mother would usually only read the Marathi newspaper. My sister and I would usually at least skim through the English newspaper but concentrate on a few sections (usually comics, editorials, and sports).

Even in India, where newspapers are generally smaller (i.e. fewer pages compared to, say, the tome that is the daily New York Times), a newspaper would try to cover everything it thought needed to be covered. So while the bulk of it would be devoted to national politics, there would be a smattering of world news, book reviews, entertainment and sports news, etc. No issue would be covered in too much depth (because of lack of space, which in turn corresponded to the high cost of newsprint) and the issues covered would be chosen keeping in mind the broad preference of a newspaper's readers.

So if you were interested in European Union politics, you would be lucky to get an article every two weeks. But at the same time, because newspapers felt that they were the only way we got content, they did feel obligated to at least sample the whole spectrum of topics. E.g. even if the sports pages were dominated by cricket, soccer and tennis, at least once in a while, volleyball and football would be covered.

In the figure above (click to see full figure), I have chosen to represent how we read back then in terms of two orthogonal axes: the breadth of reading (X-axis) and the depth of reading (Y-axis). The breadth of reading corresponds to the topics that we could cover, the depth of reading corresponds to how much we could cover on a certain topic. Each line represents a topic; how long the line is corresponds to how deeply a topic is covered.

The characteristics of pre-internet reading were:

How we read now

Reading on the internet is different.

There's far far more material to read, and it's easy to get to, requiring nothing more than a click of the mouse.

There's also more control over the material, meaning you can decide what you want to read and ignore the stuff that you don't care for. E.g. if you are interested in economic policy you can subscribe to the Business feed of the NYT and nothing else. If you're interested only in foreign policy and particularly in the U.S.-China relationship, you can use Yahoo Pipes and set up filters that will bring to you only those articles that mention the U.S. and China.

Finally, there's more depth. A newspaper, even an online newspaper, can only devote so much time and space to U.S.-China relations. But on the internet, this material can be augmented with the many blogs and wikis out there. There are blogs by anthropologists and economists, by foreign policy and international relations experts, and by political scientists -- you name it! -- all of whom are interested in conveying their point of view to both lay and specialized audiences. In other words, you can choose to specialize in whatever you want and your specialization will be world-class.

In the figure above (click to see full figure), I've tried to represent the characteristics of online reading in terms of its breadth and depth. The characteristics of internet reading are
